AWS and Go

How AWS and Go Transformed a Public Broadcaster's Digital Archive

Who are you?

"Officially" technical lead at Kablamo; read "code monkey".

```
func produce(c Coffee, b Beer) (Code, Cloud, error)
```

I blog at boyter.org, write free software at github.com/boyter/, run searchcode.com, and am on Twitter as @boyter.

Problem / Opportunity

- 250,000 audio and video files, dating back to the 1920s, stored in a digitised system

- Video stored in a proprietary format.

- System was full, with no ability to archive new content.

Problem / Opportunity

- Metadata was still being added

- Many systems for metadata. Some 36+ years old.

- 8-12 weeks to learn how to search (FQM).

- 2-week turnaround for content.

The Goal

- Search across everything

- Have a "future resistant" archive.

- Merge metadata into golden records. (Customer)

The Goal

- Self serve.

- Turn off old services

- Be production ready in 6 months

- 10+ PB of total data (?) eventually (disks, tape, etc.)

Tools

- AWS (naturally)

- S3 all the things.

- Elasticsearch

- React/TypeScript

- No choice of language. Go.

- O365 for authentication.

Architecture

Choices

- 3-AZ ECS cluster

- 2 R-type (memory-optimised) instances per AZ.

- Elasticsearch for search + cache.

- SQS / Step Functions?

- RDS.

- Public / Private?

- V4-signed uploads of 700 GB files!

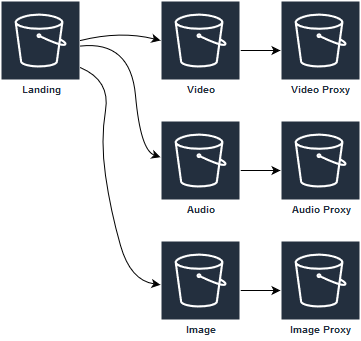

- Landing Bucket.

- Glacier

Landing

Retention policy on the landing bucket.

Resilient Design

Degrade Gracefully.

- S3

- Unable to add/edit records or download/view

- SQS

- Unable to add/edit records with new media

- Elasticsearch

- Unable to search

- RDS

- Unable to view/edit/delete collections

The catch: degrading gracefully hides errors! (The delete issue.)

AWS Elasticsearch?

It's not brilliant

- Choice of instance types limited

- No scaling or monitoring work done for you

- Forces (daily) snapshots even if you use it purely as a cache/search solution!!

- Multi-AZ support limited

- Tended to have issues under load.

Why Elasticsearch?

- Query syntax, e.g. "melbourne~1 -melbourne" (terms within one edit of "melbourne" but not "melbourne" itself, i.e. misspellings)

- Performance

- Power users requested VERY complex queries (FQM)

- Resilience

Elasticsearch Issues

The main one: queries across fields.

```
{
  "query_string": {
    "default_operator": "AND",
    "fields": [
      "person.name",
      "fact",
      "person.citizenship"
    ],
    "query": "keanu canada"
  }
}
```

Go library support is not great. Stemming. Sorting. CPU bound.
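Given the thin library support, one option is to build request bodies directly with `encoding/json`. The sketch below uses a hypothetical `searchBody` helper that wraps the `query_string` clause from the slide in a standard `_search` request body of the form `{"query": {...}}`; sending it to the cluster (for example with `net/http`) is left out.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// QueryString mirrors the query_string clause shown above.
type QueryString struct {
	DefaultOperator string   `json:"default_operator"`
	Fields          []string `json:"fields"`
	Query           string   `json:"query"`
}

// searchBody (hypothetical helper) wraps a query_string clause in a _search body.
// AND is used so every term must match, as in the example above.
func searchBody(q string, fields []string) ([]byte, error) {
	return json.Marshal(map[string]any{
		"query": map[string]any{
			"query_string": QueryString{DefaultOperator: "AND", Fields: fields, Query: q},
		},
	})
}

func main() {
	b, err := searchBody("keanu canada", []string{"person.name", "fact", "person.citizenship"})
	if err != nil {
		panic(err)
	}
	fmt.Println(string(b))
}
```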

GOPATH + Monorepo

Exploit GOPATH for multiple entry points into application.

```
.
├── assets
│   ├── imageproxy
│   │   └── main.go
│   ├── load
│   │   └── main.go
│   ├── merge
│   │   ├── audio
│   │   │   └── main.go
│   │   ├── bulk
│   │   │   └── main.go
│   │   ├── cleanup
│   │   │   └── main.go
│   │   ├── oldvideo
│   │   │   └── main.go
│   │   ├── photo
│   │   │   └── main.go
│   │   ├── video
│   │   │   └── main.go
│   │   └── wvideo
│   │       └── main.go
│   ├── transcodeFinished
│   │   └── commandline
│   │       └── main.go
│   └── transcodeStart
│       └── commandline
│           └── main.go
```

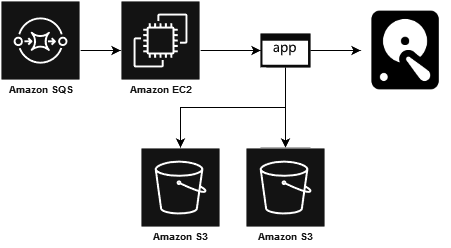

Background Jobs

- Load. Taxonomy. Graceful degrade. Restore.

- Merges.

- Transcode Start/End.

- Image Resize, watermark.

- Many one-off jobs.

- Lambda?

- Go's memory usage was a massive win.

Go for S3 copy

Excellent SQS support, but you have to code your own multipart copy.

```
for _, sqsmsg := range messages.Messages {
	// We don't want to wait for these to finish anymore but let them run in the background
	// and finish whenever they are done and naturally exit. As such no need for a waitgroup
	// here anymore
	go func(sqsmsg *sqs.Message) {
		processMessage(sqsmsg) // hypothetical handler: do the copy, then delete the message
	}(sqsmsg)
}
```
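For objects over 5 GB, a single S3 CopyObject call is not enough, so the copy has to run as a multipart upload with each part copied via UploadPartCopy. A minimal sketch of the range calculation follows. `copyRanges` is a hypothetical helper, and the limits it uses (5 MiB minimum part size, 10,000 parts maximum) are S3's documented multipart limits. Each returned string is the value of the `x-amz-copy-source-range` header for one part.

```go
package main

import "fmt"

const (
	minPartSize = 5 << 20 // S3 minimum part size (5 MiB); only the last part may be smaller
	maxParts    = 10000   // S3 maximum number of parts in one multipart upload
)

// copyRanges splits an object of size bytes into inclusive "bytes=first-last"
// ranges. partSize is doubled until the object fits in maxParts parts; for
// objects up to S3's 5 TB limit this stays below the 5 GiB maximum part size.
func copyRanges(size, partSize int64) []string {
	if partSize < minPartSize {
		partSize = minPartSize
	}
	for (size+partSize-1)/partSize > maxParts {
		partSize *= 2
	}
	var ranges []string
	for start := int64(0); start < size; start += partSize {
		end := start + partSize - 1
		if end >= size {
			end = size - 1
		}
		ranges = append(ranges, fmt.Sprintf("bytes=%d-%d", start, end))
	}
	return ranges
}

func main() {
	r := copyRanges(700_000_000_000, 5<<20) // one 700 GB master
	fmt.Println(len(r), r[0], r[len(r)-1])
}
```

For a 700 GB file the part size doubles from 5 MiB to 80 MiB before the object fits within 10,000 parts; each range can then be copied in its own goroutine, much like the SQS loop above.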

Go Image Resizing

- Don't do it at runtime

- Too slow! Watermarking.

- Moved to pre-generating and storing.

- API passes images through. Logging.

- Thumbor is pretty good

Clipping

- mediainfo, ffmpeg (mxf, mov, mp4).

- Disk caching issues.

- 2-random eviction (woo!)

- AWS Transcode? Speed/Cost.
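2-random eviction works like this: when the cache is full, sample two entries at random and evict whichever was used less recently. This approximates LRU without maintaining a full recency ordering. The sketch below models only the bookkeeping; `TwoRandomCache` is a hypothetical name, and a real disk cache would also delete the evicted clip file (e.g. with `os.Remove`).

```go
package main

import (
	"fmt"
	"math/rand"
	"time"
)

// entry records when a cached clip was last used.
type entry struct {
	key      string
	lastUsed time.Time
}

// TwoRandomCache (hypothetical) tracks cached clips; when full it samples two
// entries at random and evicts the less recently used one.
type TwoRandomCache struct {
	capacity int
	entries  []entry        // dense slice, so a random sample is a random index
	index    map[string]int // key -> position in entries
}

func NewTwoRandomCache(capacity int) *TwoRandomCache {
	if capacity < 1 {
		capacity = 1
	}
	return &TwoRandomCache{capacity: capacity, index: map[string]int{}}
}

// Touch marks key as used, inserting it if absent. It returns the evicted key, or "".
func (c *TwoRandomCache) Touch(key string) string {
	now := time.Now()
	if i, ok := c.index[key]; ok {
		c.entries[i].lastUsed = now
		return ""
	}
	evicted := ""
	if len(c.entries) >= c.capacity {
		a, b := rand.Intn(len(c.entries)), rand.Intn(len(c.entries))
		if c.entries[b].lastUsed.Before(c.entries[a].lastUsed) {
			a = b
		}
		evicted = c.entries[a].key
		c.remove(a)
	}
	c.index[key] = len(c.entries)
	c.entries = append(c.entries, entry{key: key, lastUsed: now})
	return evicted
}

// remove deletes entries[i] in O(1) by moving the last entry into its slot.
func (c *TwoRandomCache) remove(i int) {
	last := len(c.entries) - 1
	delete(c.index, c.entries[i].key)
	if i != last {
		c.entries[i] = c.entries[last]
		c.index[c.entries[i].key] = i
	}
	c.entries = c.entries[:last]
}

// Len reports how many entries are cached.
func (c *TwoRandomCache) Len() int { return len(c.entries) }

func main() {
	c := NewTwoRandomCache(2)
	for _, k := range []string{"a.mp4", "b.mp4", "c.mp4"} {
		if ev := c.Touch(k); ev != "" {
			fmt.Println("evicted", ev)
		}
	}
	fmt.Println(c.Len())
}
```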

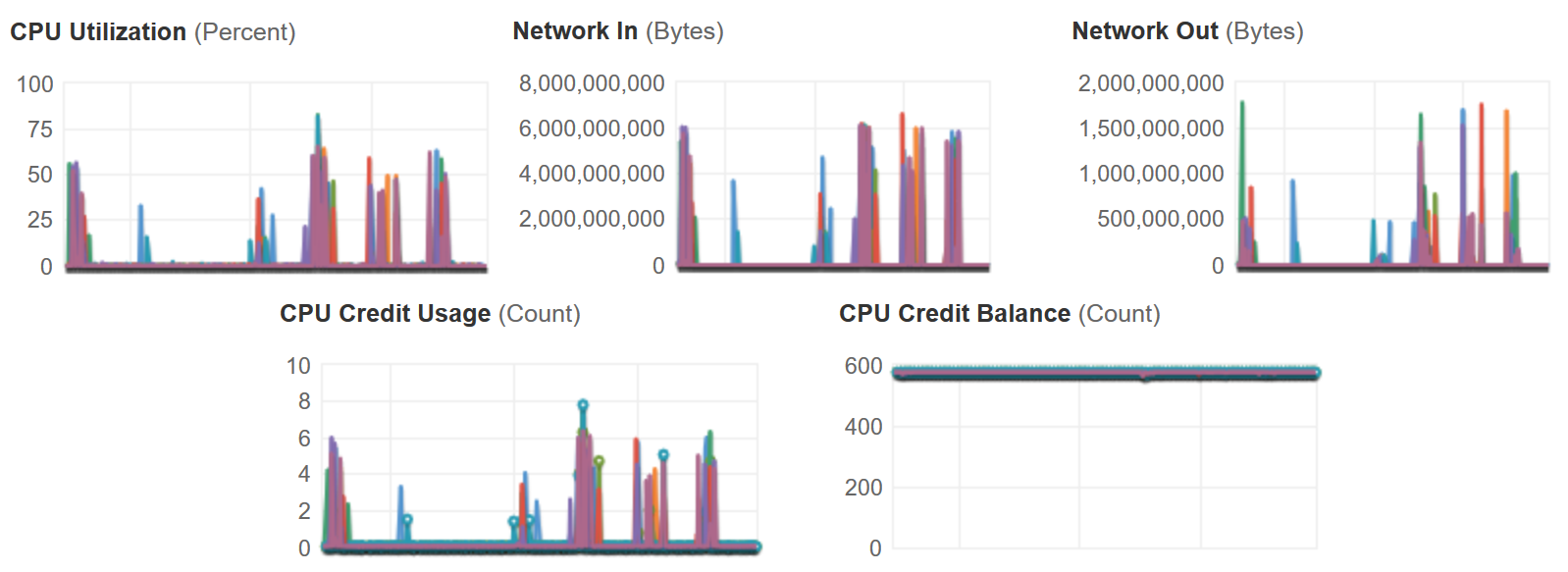

Clipping In Action

Network bound. T3 burstable network really helps!!

Glacier

Annoying when many users can request the same file. Store requests in the DB and, on the restore event, update all matching requests. Restored copies expire after 24 hours, and the exact expiration is hard to predict.
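The request-coalescing approach above can be sketched as follows. An in-memory map stands in for the database table, and `RestoreTracker`, `Request` and `Completed` are hypothetical names. `start` would wrap the S3 `RestoreObject` call, and `Completed` would be driven by the restore event. Holding the lock while starting a restore keeps the sketch simple but serialises requests.

```go
package main

import (
	"fmt"
	"sort"
	"sync"
)

// RestoreTracker (hypothetical) makes sure only one restore runs per object,
// however many users ask for it.
type RestoreTracker struct {
	mu      sync.Mutex
	waiting map[string][]string // object key -> users waiting on it
	start   func(key string) error
}

func NewRestoreTracker(start func(key string) error) *RestoreTracker {
	return &RestoreTracker{waiting: map[string][]string{}, start: start}
}

// Request records that user wants key, starting a restore only if none is pending.
func (t *RestoreTracker) Request(key, user string) error {
	t.mu.Lock()
	defer t.mu.Unlock()
	users, pending := t.waiting[key]
	if !pending {
		if err := t.start(key); err != nil {
			return err // nothing recorded, so a later request retries
		}
	}
	t.waiting[key] = append(users, user)
	return nil
}

// Completed handles the restore event: it returns everyone waiting on key
// (so all matches can be updated) and clears the pending entry.
func (t *RestoreTracker) Completed(key string) []string {
	t.mu.Lock()
	defer t.mu.Unlock()
	users := t.waiting[key]
	delete(t.waiting, key)
	sort.Strings(users)
	return users
}

func main() {
	t := NewRestoreTracker(func(key string) error {
		fmt.Println("restore requested for", key)
		return nil
	})
	_ = t.Request("video/news.mxf", "alice")
	_ = t.Request("video/news.mxf", "bob") // no second restore
	fmt.Println(t.Completed("video/news.mxf"))
}
```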

Really Helpful

Have an endpoint that exposes most environment variables.

```
{
  "environment": {
    "AppEnvironment": "PROD",
    "AudioMasterBucket": "archives.master.audio",
    "AudioProxyBucket": "archives.proxy.audio",
    "AwsRegion": "ap-southeast-2",
    "DownloadExpiryMinutes": 1440,
    "ElasticEndpoint": "https://elastic-archives.content/",
    "FrontendEndpoint": "https://archive.content",
    "HttpTimeoutSeconds": 20,
    "LandingBucket": "archives.landing",
    "MetadataBucket": "archives.records.prod",
    "PhotoBucket": "archives.photo",
    "PhotoBucketProxy": "archives.proxy.photo",
    "PortNumber": 8080,
    "SystemEnvironment": "Archive",
    "SystemEnvironmentDisplayName": "Archive",
    "UploadExpiryMinutes": 1440,
    "VideoMasterBucket": "archives.master.video",
    "VideoProxyBucket": "archives.proxy.video"
  }
}
```

What would we change?

AWS changes: Fargate (CPU), private API Gateway, Aurora Serverless, bucket cleaning, R5 instance types.

Taxonomy storage.

Probably more Lambda (15-minute timeout).

S3 key names. Maybe GORM. Proxy!

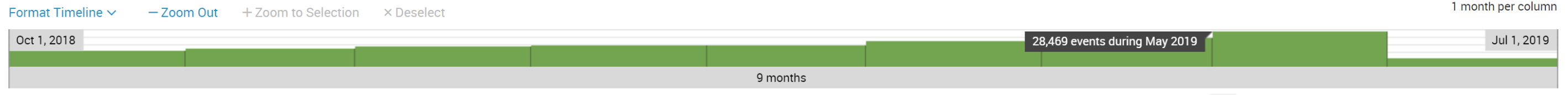

Results

- Outages: 0 (previously days/weeks).

- 976 audio master retrievals

- 4,008 video master retrievals. Glacier.

- 16,014 audio proxies played

- 157,281 video proxies played (28,469 in May)

- 593,085 searches performed

- Average search time ~100 ms

GA in January. Culture change.

Results Continued

- 3 months to production cut-over, then the old systems were turned off.

- 132 TB of data through the API (so far)

- 1 PB of video data in S3 / Glacier

- 40 TB of audio data in S3

- 16,000 images

- ~327 days of video watching

- Some joker... 60x700 GB videos in one hour.

- Importing other systems.

- On time + budget!

Thank You!

Presentation located at https://boyter.org/static/aws-go-archive-presso/ or just go to boyter.org and I will link it up tomorrow.